This article explains various Machine Learning model evaluation and validation metrics used for classification models.

After we develop a machine learning model, we want to determine how good it is. This can be a difficult question to answer, but there are some standard metrics we can use. We will look at several of them below.

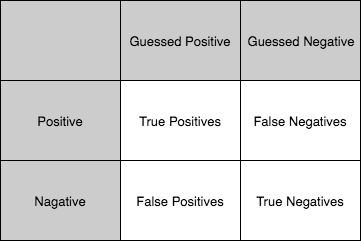

Confusion Matrix

A confusion matrix is a table describing the performance of a model. The matrix consists of four values and two dimensions. The values are:

- True Positive

- False Negative (sometimes also called a Type 2 Error in the literature)

- False Positive (sometimes also called a Type 1 Error in the literature)

- True Negative

The two dimensions are:

- True labels

- Model response
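As a minimal sketch of how the four values relate to the two dimensions, the plain-Python function below counts each cell from a list of true labels and a list of model responses. The function name `confusion_counts`, the choice of `1` as the positive label, and the sample data are assumptions made for illustration only.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count the four confusion-matrix cells for a binary problem."""
    tp = fn = fp = tn = 0
    for truth, guess in zip(y_true, y_pred):
        if truth == positive and guess == positive:
            tp += 1  # positive point, model said positive
        elif truth == positive:
            fn += 1  # positive point, model said negative
        elif guess == positive:
            fp += 1  # negative point, model said positive
        else:
            tn += 1  # negative point, model said negative
    return {"TP": tp, "FN": fn, "FP": fp, "TN": tn}


# Hypothetical labels: 1 = positive, 0 = negative
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]

counts = confusion_counts(y_true, y_pred)
print(counts)
```

Every metric discussed in the rest of the article can be derived from these four counts.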

Accuracy

Accuracy answers the following question:

Out of all the classifications the model has performed, how many were correct?

Accuracy = (True Positives + True Negatives) / Total number of classifications

In other words, accuracy is the ratio between the number of correctly classified points and the total number of points.

In scikit-learn, you can calculate the accuracy with the accuracy_score function, as seen below.

    from sklearn.metrics import accuracy_score
    accuracy_score(y_true, y_pred)

The parameters y_true and y_pred are two arrays containing the true labels and the predicted labels, respectively.

When accuracy won’t work

Be cautious when using accuracy, as it can be misleading. I will use an example to demonstrate this.

Let’s say we have a dataset of 1,000 transactions, of which 950 are good and 50 are fraudulent.

So what model would have good accuracy? In other words, what model would be correct most of the time?

A model that classifies every transaction as good would achieve high accuracy, yet it would be a terrible, naive model because it never catches a single fraudulent transaction. Its accuracy is:

950 / 1000 = 95%

As seen above, it can be tricky to judge a model by accuracy alone, especially when the classes are imbalanced.
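The transaction example can be reproduced in a few lines of plain Python. This is only a sketch: encoding good transactions as `0` and fraudulent ones as `1` is an assumption made here, and the "model" is simply a list that predicts good for every transaction.

```python
# 950 good transactions (label 0) and 50 fraudulent ones (label 1)
y_true = [0] * 950 + [1] * 50

# A naive "model" that labels every transaction as good
y_pred = [0] * len(y_true)

correct = sum(t == p for t, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# How many of the fraudulent transactions did the model actually flag?
fraud_total = sum(t == 1 for t in y_true)
fraud_caught = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))

print(f"accuracy: {accuracy:.2%}")
print(f"fraud caught: {fraud_caught} of {fraud_total}")
```

The accuracy looks excellent even though the model flags none of the fraudulent transactions, which is exactly why accuracy alone is not enough on imbalanced data.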

What about false negatives and false positives?

What is worse: too many false negatives or too many false positives? Well, it depends on what the model is trying to solve.

Let’s look at two examples, a model that classifies emails as spam or not spam and a model that classifies patients as sick or not sick.

The model classifying emails

- False positive – Classifying a non-spam mail as spam

- False negative – Classifying a spam mail as non-spam

The model classifying patients

- False positive – Diagnosing a healthy patient as sick

- False negative – Diagnosing a sick patient as healthy

For the model classifying emails, we would like as few false positives as possible, as it is inconvenient when non-spam emails are sent to the spam folder. On the other hand, we can live with some spam emails in our inbox.

For the model classifying patients, we would like as few false negatives as possible, as it would be terrible to send sick patients home without treatment. On the other hand, it is acceptable that some healthy patients get some extra tests.

Precision and Recall

As we can see, models can be fundamentally different depending on the problem they are solving, and therefore they can have totally different priorities. To measure how well a model meets these priorities, we have two metrics: Precision and Recall.

Precision

The precision metric can be calculated as follows.

Precision = True Positives / (True Positives + False Positives)

In other words, out of all the patients that the model classified as sick, how many were actually sick?

Recall

The recall metric can be calculated as follows.

Recall = True Positives / (True Positives + False Negatives)

Where precision looks at everything the model labeled as positive, recall looks at everything that actually is positive. For the model classifying patients as sick or not sick, recall answers the question: out of all sick patients, how many did the model correctly classify as sick?
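To make the difference concrete, here is a small sketch using the standard definitions, precision = TP / (TP + FP) and recall = TP / (TP + FN). The patient counts are hypothetical, and returning 0.0 when a denominator is zero is a convention chosen for this example rather than part of the definitions.

```python
def precision(tp, fp):
    """Share of predicted positives that are truly positive."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Share of actual positives that the model found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical patient model: 40 sick patients correctly flagged,
# 10 healthy patients wrongly flagged, 20 sick patients missed.
tp, fp, fn = 40, 10, 20

p = precision(tp, fp)  # 40 / 50
r = recall(tp, fn)     # 40 / 60

print(f"precision: {p:.3f}")
print(f"recall:    {r:.3f}")
```

In this made-up case the model is right about most of the patients it flags, but it still misses a third of the sick patients, which matters more for a medical model.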

F1 Score

We now know that, depending on its goal, a model can be tuned for high precision or for high recall. However, it is inconvenient to always carry two numbers around in order to make a decision about a model, so it would be nice to combine precision and recall into a single score. The simplest option is to take their average, but that would be a bad idea: a model with a very low precision or a very low recall could still get a fairly high score as long as the other value is high.

A better way to combine precision and recall into a single score is the harmonic mean.

Harmonic Mean

It is a mathematical fact that the harmonic mean of two positive numbers is never greater than their arithmetic mean, and the two are equal only when the numbers are equal. The harmonic mean will produce a low score when either the precision or the recall is very low.

In Machine Learning model evaluation and validation, the harmonic mean of precision and recall is called the F1 Score.

F1 Score = 2 * (Precision * Recall) / (Precision + Recall)
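A quick numeric comparison shows why the harmonic mean is preferred. The sketch below uses the standard formula F1 = 2 * P * R / (P + R); the precision and recall values are made up for illustration, and returning 0.0 when both are zero is a convention chosen here.

```python
def arithmetic_mean(precision, recall):
    return (precision + recall) / 2


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical lopsided model: very precise, but it finds few positives
precision, recall = 0.9, 0.1

mean = arithmetic_mean(precision, recall)
f1 = f1_score(precision, recall)

print(f"arithmetic mean: {mean:.2f}")
print(f"F1 score:        {f1:.2f}")
```

The arithmetic mean hides the poor recall, while the F1 Score is pulled down toward the weaker of the two values.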

F-Beta Score

Even though we now have a single score to base our model evaluation on, some models still need to lean more towards precision or more towards recall.

For that purpose, we can use the F-Beta score. A beta below 1 weights precision more heavily, a beta above 1 weights recall more heavily, and a beta of 1 gives the F1 Score.

F-Beta Score = (1 + beta^2) * (Precision * Recall) / (beta^2 * Precision + Recall)

Finding the right beta value is not an exact science. Finding the right balance between precision and recall requires a lot of intuition about the problem to be solved and the data being used.

In scikit-learn, you can calculate the F-Beta Score with the fbeta_score function, as seen below.

    from sklearn.metrics import fbeta_score
    fbeta_score(y_true, y_pred, beta=beta)

The parameters y_true and y_pred are two arrays containing the true labels and the predicted labels. The parameter beta, passed as a keyword argument, is the beta value you have chosen for the model.
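To see how beta shifts the balance, the following plain-Python sketch evaluates the formula F-Beta = (1 + beta^2) * P * R / (beta^2 * P + R) directly from a precision and a recall value. The helper `f_beta` and the numbers are hypothetical; it is not a replacement for scikit-learn's function, which works on label arrays.

```python
def f_beta(precision, recall, beta):
    """F-Beta score computed directly from precision and recall."""
    denominator = beta ** 2 * precision + recall
    if denominator == 0:
        return 0.0  # convention chosen for this sketch
    return (1 + beta ** 2) * precision * recall / denominator


# Hypothetical model with modest precision and good recall
precision, recall = 0.5, 0.8

scores = {beta: f_beta(precision, recall, beta) for beta in (0.5, 1, 2)}

for beta, score in scores.items():
    print(f"beta={beta}: {score:.3f}")
```

Because recall is the stronger of the two values here, the score rises as beta grows and recall gets more weight, and it falls when beta drops below 1 and precision dominates.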

ROC Curve (Receiver Operating Characteristic)

The ROC curve is built by splitting the data N times and plotting the resulting values. For each split, we calculate the True Positive Rate and the False Positive Rate.

The closer the area under the curve is to 1, the better the model is.

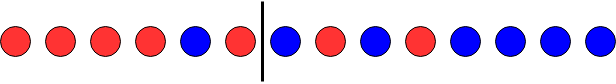

Consider a one-dimensional dataset consisting of the following 14 points.

In order to plot a ROC curve, we would need to split the data N times and calculate the True Positive Rate and False Positive Rate for each split.

True Positive Rate = True Positives / All Positives = 6/7 = 0.857

False Positive Rate = False Positives / All Negatives = 2/7 = 0.286

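The resulting N pairs of (False Positive Rate, True Positive Rate) can then be plotted, with the False Positive Rate on the horizontal axis and the True Positive Rate on the vertical axis. The area under this curve is the model's score: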

- A random model will have a score of around 0.5

- A good model will have a score closer to 1

- A perfect model will have a score of 1
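The procedure can be sketched in plain Python: try a split at every distinct value, compute the two rates for each split, and approximate the area under the curve with the trapezoidal rule. The eight data points, the function names, and the rule "predict positive when the value is at or above the split" are assumptions for this example; they are not the 14 points from the figure.

```python
def roc_points(values, labels):
    """Return (FPR, TPR) pairs, one per split, ordered for plotting."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]  # split above every value: nothing is predicted positive
    for split in sorted(set(values), reverse=True):
        # Everything at or above the split is predicted positive
        tp = sum(1 for v, y in zip(values, labels) if v >= split and y == 1)
        fp = sum(1 for v, y in zip(values, labels) if v >= split and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def area_under_curve(points):
    """Trapezoidal area under (x, y) points that are sorted by x."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical one-dimensional data: 1 = positive, 0 = negative
values = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
labels = [0, 0, 1, 0, 1, 0, 1, 1]

points = roc_points(values, labels)
auc = area_under_curve(points)
print(f"area under the curve: {auc:.4f}")
```

If the positives and negatives were perfectly separated along the axis, the same code would give an area of 1, matching the last bullet above.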

All of the above metrics are mainly focused on classification models. There is a different set of metrics that can be used for regression models.